It’s That Blog Post. The release one, where we round up all the last of the features approved since the last time I blogged. If you’re new here, you’ll want to go check out these previous articles to learn more about what is/isn’t going into C23, many of them including examples, explanations, and some rationale:

The last meeting was pretty jam-packed, and a lot of things made it through at the 11th hour. We also lost quite a few good papers and features too, so they’ll have to be reintroduced next cycle, which might take us a whole extra 10 years to do. Some of us are agitating for a faster release cycle, mainly because we have 20+ years of existing practice we’ve effectively ignored and there’s a lot of work we should be doing to reduce that backlog significantly. It’s also just no fun waiting for __attribute__((cleanup(…))) (defer), statement expressions, better bitfields, wide pointers (a native pointer + size construct), a language-based generic function pointer type (GCC’s void(*)(void)) and like 20 other things for another 10 years when they’ve been around for decades.

But, I’ve digressed long enough: let’s not talk about the future, but the present. What’s in C23? Well, it’s everything (sans the typo-fixes the Project Editors - me ‘n’ another guy - have to do) present in N3047. Some of them are pretty big blockbuster features for C (C++ will mostly quietly laugh, but that’s fine because C is not C++ and we take pride in what we can get done here, with our community). The first huge thing that will drastically improve code is a combination-punch of papers written by Jens Gustedt and Alex Gilding.

N3006 + N3018 - constexpr for Object Definitions

I suppose most people did not see this one coming down the pipe for C. (Un?)Fortunately, C++ was extremely successful with constexpr and C implementations were cranking out larger and larger constant expression parsers for serious speed gains and to do more type checking and semantic analysis at compile-time. Sadly, despite many compilers getting powerful constant expression processors, standard C just continued to give everyone who ended up silently relying on those increasingly beefy and handsome compilers’ tricks a gigantic middle finger.

For example, in my last post about C (or just watching me post on Twitter), I explained how this:

const int n = 5 + 4;

int purrs[n];

// …

is some highly illegal contraband. This creates a Variable-Length Array (VLA), not a constant-sized array with size 9. What’s worse is that the Usual Compilers™ (GCC, Clang, ICC, MSVC, and most other optimizing compilers actually worth compiling with) were typically powerful enough to basically turn the code generation of this object – so long as you didn’t pass it to something expecting an actual Variably-Modified Type (also talked about in another post) – into working like a normal C array.

This left people relying on the fact that this was a C array, even though it never was. And it created enough confusion that we had to accept N2713 to add clarification to the Standard Library to tell people that No, Even If You Can Turn That Into A Constant Expression, You Cannot Treat It Like One For The Sake Of the Language. One way to force an error up-front if the compiler would potentially turn something into not-a-VLA behind-your-back is to do:

const int n = 5 + 4;

int purrs[n] = { 0 }; // 💥

// …

VLAs are not allowed to have initializers¹, so adding one makes a compiler scream at you for daring to write one. Of course, if you’re one of those S P E E D junkies, this could waste precious cycles in potentially initializing your data to its 0 bit representation. So, there really was no way to win when dealing with const here, despite everyone’s mental model – thanks to the word’s origin in “constant” – latching onto n being a constant expression. Compilers “accidentally” supporting it by either not treating it as a VLA (and requiring the paper I linked to earlier to be added to C23 as a clarification), or treating it as a VLA but extension-ing and efficient-code-generating the problem away just resulted in one too many portability issues. So, in true C fashion, we added a 3rd way that was DEFINITELY unmistakable:

constexpr int n = 5 + 4;

int purrs[n] = { 0 }; // ✅

// …

The new constexpr keyword for C means you don’t have to guess at whether that is a constant expression, or hope your compiler’s optimizer or frontend is powerful enough to treat it like one to get the code generation you want if VLAs with other extensions are on-by-default. You are guaranteed that this object is a constant expression, and if it is not the compiler will loudly yell at you. While doing this, the wording for constant expressions was also improved dramatically, allowing:

- compound literals (with the constexpr storage class specifier);

- structures and unions with member access by .;

- and, the usual arithmetic / integer constant expressions,

to all be constant expressions now.
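To see the difference in practice, here is a minimal sketch. The names purr_limit and count_purrs are made up for this example, and the #if fallback to the old enum trick is my own assumption so that the snippet still builds on compilers without C23 support:

```c
#include <assert.h>
#include <stddef.h>

#if defined(__STDC_VERSION__) && __STDC_VERSION__ >= 202311L
/* C23: a named object that is guaranteed to be a constant expression. */
constexpr int purr_limit = 5 + 4;
#else
/* Pre-C23 fallback (an assumption of this sketch): the classic enum trick. */
enum { purr_limit = 5 + 4 };
#endif

/* Usable anywhere an integer constant expression is required. */
static_assert(purr_limit == 9, "purr_limit must be a compile-time constant");

/* Hypothetical helper: reports how many elements the array really has. */
size_t count_purrs(void) {
    /* The initializer is only accepted because purrs is NOT a VLA. */
    int purrs[purr_limit] = { 0 };
    return sizeof(purrs) / sizeof(purrs[0]);
}
```

Because purrs has an initializer, this only compiles if the array is a genuine fixed-size array of 9 elements rather than a VLA.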

Oh No, Those Evil Committee People are Ruining™ my Favorite LanguageⓇ with C++ NonsenseⒸ!

Honestly? I kind of wish I could ruin C sometimes, but believe it or not: we can’t!

Note that there are no function calls included in this, so nobody has to flip out or worry that we’re going to go the C++ route of stacking on a thousand different “please make this function constexpr so I can commit compile-time crimes”. It’s just for objects right now. There is interest in doing this for functions, but unlike C++ the intent is to provide a level of constexpr functions that is so weak it’s worse than even the very first C++11 constexpr model, and substantially worse than what GCC, Clang, ICC, and MSVC can provide at compile-time right now in their C implementations.

This is to keep it easy to implement evaluation in smaller compilers and prevent feature-creep like the C++ feature. C is also protected from additional feature creep because, unlike C++, there’s no template system. What justified half of the improvements to constexpr functions in C++ was “well, if I just rewrite this function in my favorite Functional Language – C++ Templates! – and tax the compiler even harder, I can do exactly what I want with worse compile-time and far more object file bloat”. This was a scary consideration for many on the Committee, but we will not actually go that direction precisely because we are in the C language and not C++.

You cannot look sideways and squint and say “well, if I just write this in the most messed up way possible, I can compute a constant expression in this backdoor Turing complete functional language”; it just doesn’t exist in C. Therefore, there is no prior art or justification for an ever-growing selection of constant expression library functions or marked-up headers. Even if we get constexpr functions, it will be literally and intentionally underpowered and weak. It will be so bad that the best you can do with it is write a non-garbage max function to use as the behind-the-scenes for a max macro with _Generic. Or, maybe replace a few macros with something small and tiny.

Some people will look at this and go: “Well. That’s crap. The reason I use constexpr in my C++-like-C is so I can write beefy compile-time functions to do lots of heavy computation once at compile-time, and have it up-to-date with the build at the same time. I can really crunch a perfect hash or create a perfect table that is hardware-specific and tailored without needing to drop down to platform-specific tricks. If I can’t do that, then what good is this?” And it’s a good series of questions, dear reader. But, my response to this for most C programmers yearning for better is this:

we get what we shill for.

With C we do not ultimately have the collective will or implementers brave enough to take-to-task making a large constant expression parser, even if the C language is a lot simpler to write one for compared to C++. Every day we keep proclaiming C is a simple and beautiful language that doesn’t need features, even features that are compile-time only with no runtime overhead. That means, in the future, the only kind of constant functions on the table are ones with no recursion, only one single statement allowed in a function body, plus additional restrictions to get in your way. But that’s part of the appeal, right? The compilers may be weak, the code generation may be awful, most of the time you have to abandon actually working in C and instead just use it as a macro assembler and drop down to bespoke, hand-written platform-specific assembly nested in a god-awful compiler-version-specific #ifdef, but That’s The Close-To-The-Metal C I’m Talkin’ About, Babyyyyy!!

“C is simple” also means “the C standard is underpowered and cannot adequately express everything you need to get the job done”. But if you ask your vendor nicely and promise them money, cookies, and ice cream, maybe they’ll deign to hand you something nice. (But it will be outside the standard, so I hope you’re ready to put an expensive ring 💍 on your vendor’s finger and marry them.)

N3038 - Introduce Storage Classes for Compound Literals

Earlier, I sort of glossed over the fact that Compound Literals are now part of things that can be constant expressions. Well, this is the paper that enables such a thing! This is a feature that actually solves a problem C++ was having as well, while also fixing a lot of annoyances with C. For those of you in the dark and who haven’t caught up with C99, C has a feature called Compound Literals. It’s a way to create an object of any type - usually, a structure - that has a longer lifetime than normal and can act as a temporary going into a function. They’re used pretty frequently in code examples and stuff done by Andre Weissflog of sokol_gfx.h fame, who writes some pretty beautiful C code (excerpted from the link):

#define SOKOL_IMPL

#define SOKOL_GLCORE33

#include <sokol_gfx.h>

#define GLFW_INCLUDE_NONE

#include <GLFW/glfw3.h>

int main(int argc, char* argv[]) {

/* create window and GL context via GLFW */

glfwInit();

/* … CODE ELIDED … */

/* setup sokol_gfx */

sg_setup(&(sg_desc){0}); // ❗ Compound Literal

/* a vertex buffer */

const float vertices[] = {

// positions // colors

0.0f, 0.5f, 0.5f, 1.0f, 0.0f, 0.0f, 1.0f,

0.5f, -0.5f, 0.5f, 0.0f, 1.0f, 0.0f, 1.0f,

-0.5f, -0.5f, 0.5f, 0.0f, 0.0f, 1.0f, 1.0f

};

sg_buffer vbuf = sg_make_buffer(&(sg_buffer_desc){ // ❗ Compound Literal

.data = SG_RANGE(vertices)

});

/* a shader */

sg_shader shd = sg_make_shader(&(sg_shader_desc){ // ❗ Compound Literal

.vs.source =

"#version 330\n"

"layout(location=0) in vec4 position;\n"

"layout(location=1) in vec4 color0;\n"

"out vec4 color;\n"

"void main() {\n"

" gl_Position = position;\n"

" color = color0;\n"

"}\n",

.fs.source =

"#version 330\n"

"in vec4 color;\n"

"out vec4 frag_color;\n"

"void main() {\n"

" frag_color = color;\n"

"}\n"

});

/* … CODE ELIDED … */

return 0;

}

C++ doesn’t have them (though GCC, Clang, and a few other compilers support them out of necessity). There is a paper by Zhihao Yuan to support Compound Literal syntax in C++, but there was a hang up. Compound Literals have a special lifetime in C called “block scope” lifetime. That is, compound literals in functions behave as-if they are objects created in the enclosing scope, and therefore retain that lifetime. In C++, where we have destructors, unnamed/invisible C++ objects being l-values (objects whose address you can take) and having “Block Scope” lifetime (lifetime until where the next } was) resulted in the usual intuitive behavior of C++’s temporaries-passed-to-functions turning into a nightmare.

For C, this didn’t matter and - in many cases - the behavior was even relied on to have longer-lived “temporaries” that survived beyond the duration of a function call to, say, chain with other function calls in a macro expression. For C++, this meant that some types of RAII resource holders – like mutexen/locks, or just data holders like dynamic arrays – would hold onto the memory for way too long.

The conclusion from the latest conversation was “we can’t have compound literals, as they are, in C++, since C++ won’t take the semantics of how they work from the C standard in their implementation-defined extensions and none of the implementations want to change behavior”. Which is pretty crappy: taking an extension from C’s syntax and then kind of just… smearing over its semantics is a bit of a rotten thing to do, even if the new semantics are better for C++.

Nevertheless, Jens Gustedt’s paper saves us a lot of the trouble. While default, plain compound literals have “block scope” (C) or “temporary r-value scope” (C++), with the new storage-class specification feature, you can control that. Borrowing the sg_setup function above that takes the sg_desc structure type:

#include <sokol_gfx.h>

SOKOL_GFX_API_DECL void sg_setup(const sg_desc *desc);

we are going to add the static modifier, which means that the compound literal we create has static storage duration:

int main (int argc, const char* argv[]) {

/* … CODE ELIDED … */

/* setup sokol_gfx */

sg_setup(&(static sg_desc){0}); // ❗ Compound Literal

/* … CODE ELIDED … */

}

Similarly, register, thread_local, and even constexpr can go there. constexpr is perhaps the most pertinent to people today, because right now using compound literals in initializers for const data is technically SUPER illegal:

typedef struct crime {

int criming;

} crime;

const crime crimes = (crime){ 11 }; // ❗ ILLEGAL!!

int main (int argc, char* argv[]) {

return crimes.criming;

}

It will work on a lot of compilers (unless warnings/errors are cranked up), but it’s similar to the VLA situation. The minute a compiler decides to get snooty and picky, they have all the justification in the world because the standard is on their side. With the new constexpr specifier, both structures and unions are considered constant expressions, and it can be applied to compound literals as well:

typedef struct crime {

int criming;

} crime;

const crime crimes = (constexpr crime){ 11 }; // ✅ LEGAL BABYYYYY!

int main (int argc, char* argv[]) {

return crimes.criming;

}

Nice.
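The lifetime difference is easiest to see when a pointer has to outlive the block it was created in. The following is a sketch only: window_config, default_config, and config_area are hypothetical names, not part of sokol_gfx.h, and the pre-C23 branch is a fallback I added so the example still compiles on older compilers:

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical settings structure used only for this illustration. */
struct window_config {
    int width;
    int height;
};

/* Returns a pointer that stays valid after the function returns. */
const struct window_config *default_config(void) {
#if defined(__STDC_VERSION__) && __STDC_VERSION__ >= 202311L
    /* C23: static storage duration, so the address does not dangle.
       A plain compound literal here would die at the closing brace. */
    return &(static const struct window_config){ .width = 640, .height = 480 };
#else
    /* Pre-C23 fallback (assumption of this sketch): a named static object. */
    static const struct window_config fallback = { .width = 640, .height = 480 };
    return &fallback;
#endif
}

/* Reads through the pointer long after default_config has returned. */
int config_area(const struct window_config *cfg) {
    assert(cfg != NULL);
    return cfg->width * cfg->height;
}
```

Without the static, returning the address of the literal would hand the caller a pointer to an object whose block-scope lifetime has already ended.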

N3017 - #embed

Go read this to find out all about the feature and how much of a bloody pyrrhic victory it was.

N3033 - Comma Omission and Deletion (__VA_OPT__ in C and Preprocessor Wording Improvements)

This paper was a long time coming. C++ got it first, making it slightly hilarious that C harps on standardizing existing practice so much but C++ tends to beat it to the punch for features which solve long-standing Preprocessor shenanigans. If you’ve ever had to use __VA_ARGS__ in C, and you needed to pass 0 arguments to that …, or try to use a comma before the __VA_ARGS__, you know that things got genuinely messed up when that code had to be ported to other platforms. It got a special entry in GCC’s documentation because of how blech the situation ended up being:

… GNU CPP permits you to completely omit the variable arguments in this way. In the above examples, the compiler would complain, though since the expansion of the macro still has the extra comma after the format string.

To help solve this problem, CPP behaves specially for variable arguments used with the token paste operator, ‘##’. If instead you write

#define debug(format, ...) fprintf (stderr, format, ## __VA_ARGS__)

and if the variable arguments are omitted or empty, the ‘##’ operator causes the preprocessor to remove the comma before it. If you do provide some variable arguments in your macro invocation, GNU CPP does not complain about the paste operation and instead places the variable arguments after the comma. …

This is solved by the use of the C++-developed __VA_OPT__, which expands out to a legal token sequence if and only if the arguments passed to the variadic … are not empty. So, the above could be rewritten as:

#define debug(format, ...) fprintf (stderr, format __VA_OPT__(,) __VA_ARGS__)

This is safe and contains no extensions now. It also avoids any preprocessor undefined behavior. Furthermore, C23 allows you to pass nothing for the … argument, giving users a way out of the previous constraint violation and murky implementation behaviors. It works in both the case where you write debug("meow") and debug("meow", ) (with the empty argument passed explicitly). It’s a truly elegant design and we have Thomas Köppe to thank for bringing it to both C and C++ for us. This will allow a really nice standard behavior for macros, and is especially good for formatting macros that no longer need to do weird tricks to special-case for having no arguments.
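As a small sketch of the same idea applied to something other than fprintf, here is a hypothetical FORMAT_INTO macro (my name, not from the paper) that forwards to snprintf. It assumes a preprocessor that already understands __VA_OPT__, which C23 requires and which recent GCC and Clang also accept in their default modes:

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

/* The comma is emitted only when at least one variadic argument is present. */
#define FORMAT_INTO(buf, size, format, ...) \
    snprintf((buf), (size), format __VA_OPT__(,) __VA_ARGS__)

/* Zero variadic arguments: expands to snprintf(buf, size, "meow"). */
int format_plain(char *buf, size_t size) {
    assert(buf != NULL && size > 0);
    return FORMAT_INTO(buf, size, "meow");
}

/* One variadic argument: expands to snprintf(buf, size, "%d purrs", purrs). */
int format_count(char *buf, size_t size, int purrs) {
    assert(buf != NULL && size > 0);
    return FORMAT_INTO(buf, size, "%d purrs", purrs);
}
```

Both call shapes go through the same macro with no ## pasting tricks and no dangling comma in the zero-argument case.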

Which, speaking of 0-argument … functions…

N2975 - Relax requirements for variadic parameter lists

This paper is pretty simple. It recognizes that there’s really no reason not to allow

void f(...);

to exist in C. C++ has it, and all the arguments get passed successfully, and nobody’s lost any sleep over it. It was also an important filler since, as talked about in old blog posts, we have finally taken the older function call style and put it down after 30+ years of being in existence as a feature that never got to see a single proper C standard release non-deprecated. This was great! Except, as that previous blog post mentions, we had no way of having a general-purpose Application Binary Interface (ABI)-defying function call anymore. That turned out to be bad enough that after the deprecation and removal we needed to push for a fix, and lucky for us void f(...); had not made it into standard C yet.

So, we put it in. No longer needing the first parameter, and no longer requiring it for va_start, meant we could provide a clean transition path for everyone relying on K&R functions to move to the …-based function calls. This means that mechanical upgrades of old codebases - with tools - is now on-the-table for migrating old code to C23-compatibility, while finally putting K&R function calls – and all their lack of safety – in the dirt. 30+ years, but we could finally capitalize on Dennis M. Ritchie’s dream here, and put these function calls to bed.

Of course, compilers that support both C and C++, and compilers that already had void f(...); functions as an extension, may have deployed an ABI that is incompatible with the old K&R declarations of void f();. This means that anyone doing a mechanical upgrade will need to check with their vendor and make sure that void f(...); occupies the same calling convention as the old declaration. If it does not, and the person calling the function cannot update the other side (which might be pulling assembly or using a different language), then the upgrade that goes through to replace every void f(); may need to also add a vendor attribute to make sure the function calling convention is compatible with the old K&R one. Personally, I suggest:

[[vendor::kandr]] void f();

, or something similar. But, ABI exists outside the standard: you’ll need to talk to your vendor about that one when you’re ready to port to an exclusively-post-C23 world. (I doubt anyone will compile for an exclusively C23-and-above world, but it is nice to know there is a well-defined migration path for users still hooked on a 30+ year deprecated feature.) Astute readers may notice that if they don’t have a parameter to go off of, how do they commit stack-walking sins to get to the arguments? And, well, the answer is you still can: ztd.vargs has a proof-of-concept of that (on Windows). You still need some way to get the stack pointer in some cases, but that’s been something compilers have provided as an intrinsic for a while now (or something you could do by committing register crimes). In ztd.vargs, I had to drop down into assembly to start fishing for stuff more directly when I couldn’t commit more direct built-in compiler crimes. So, this is everyone’s chance to get really in touch with that bare-metal they keep bragging about for C. Polish off those dusty manuals and compiler docs, it’s time to get intimately familiar with all the sins the platform is doing on the down-low!

N3029 - Improved Normal Enumerations

What can I say about this paper, except…

What The Hell, Man?

It is absolutely bananas to me that C – the systems programming language, the language where const int n = 5 is not a constant expression so people tell you to use enum { n = 5 } instead – just had this situation going on since its inception. “16 bits is enough for everyone” is what Unicode said, and we paid for it by having UTF-16, a maximum limit of 21 bits for our Unicode code points (“Unicode scalar values” if you’re a nerd), and the entire C and C++ standard libraries with respect to text encoding just being completely impossible to use. (On top of the library not working for Big5-HKSCS as a multibyte/narrow encoding.) So of course, when I finally sat down with the C standard and read through the thing, finding that “must be representable by an int” was the exact wording for enumeration constants was infuriating. 32 bits may be good, but there were plenty of platforms where int was still 16 bits. Worse, if you put code into a compiler where the value was too big, not only would you not get errors on most compilers, you’d sometimes just get straight up miscompiles. This is not because the compiler vendor is a jerk or bad at their job; the standard literally just phones it in, and every compiler from ICC to MSVC let you go past the low 16-bit limit and occasionally even exceed the 32-bit INT_MAX without so much as a warning. It was a worthless clause in the standard, and it took a lot out of me to fight to correct this one.

The paper next in this blog post was seen as the fix, and we decided that the old code – the code where people used 0x10000 as a bit flag – was just going to be non-portable garbage. Did you go to a compiler where int is 16 bits and INT_MAX is smaller than 0x10000? Congratulations: your code was non-standard, you’re now in implementation-defined territory, pound sand! It took a lot of convincing, nearly got voted down the first time we took a serious poll on it (just barely scraped by with consensus), but the paper rolled in to C23 at the last meeting. A huge shout out to Aaron Ballman who described this paper as “value-preserving”, which went a really long way in connecting everyone’s understanding of how this was meant to work. It added a very explicit set of rules on how to do the computation of the enumeration constant’s value, so that it was large enough to handle constants like 0x10000 or ULLONG_MAX. It keeps it to be int wherever possible to preserve the semantics of old code, but if someone exceeds the size of int then it’s actually legal to upgrade the backing type now:

enum my_values {

a = 0, // 'int'

b = 1, // 'int'

c = 3, // 'int'

d = 0x1000, // 'int'

f = 0xFFFFF, // 'int' still

g, // implicit +1, on 16-bit platform upgrades type of the constant here

e = g + 24, // uses "current" type of g - 'long' or 'long long' - to do math and set value to 'e'

i = ULLONG_MAX // 'unsigned long' or 'unsigned long long' now

};

When the enumeration is completed (the closing brace), the implementation gets to select a single type that my_values is compatible with, and that’s the type used for all the enumerations here if int is not big enough to hold ULLONG_MAX. That means this next snippet:

int main (int argc, char* argv[]) {

// when enum is complete,

// it can select any type

// that it wants, so long as it's

// big enough to represent the values

return _Generic(a,

unsigned long: 1,

unsigned long long: 0,

default: 3);

}

can still return any of 1, 0, or 3. But, at the very least, you know a, or g or i will never truncate or lose the value you put in as a constant expression, which was the goal. The type was always implementation-defined (see: -fshort-enums shenanigans of old). All of that old code that used to be wrong is now no longer wrong. All of those people who tried to write wrappers/shims for OpenGL who used enumerations for their integer-constants-with-nice-identifier-names are also now correct, so long as they are using C23. (This is also one reason why the OpenGL constants in some of the original OpenGL code are written as preprocessor defines (#define GL_ARB_WHATEVER …) and not enumerations. Enumerations would break with any of the OpenGL values above 0xFFFF on embedded platforms; they had to make the move to macros, otherwise it was busted.)
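A compact way to check the value-preserving behavior for yourself is a sketch like this one. The enumeration and function names are invented for illustration; on a conforming C23 compiler the assertions hold by the rules above, while older compilers only accept the oversized constants as an extension (GCC and Clang do, others may not):

```c
#include <assert.h>
#include <limits.h>

/* Hypothetical flag set with constants that do not fit a small int. */
enum texture_flag {
    TEXTURE_NONE = 0,
    TEXTURE_WIDE = 0x10000,      /* too big for a 16-bit int */
    TEXTURE_HUGE = 0x100000000,  /* too big for a 32-bit int */
    TEXTURE_ALL  = ULLONG_MAX    /* needs the full unsigned range */
};

/* No constant was truncated on its way into the enumeration. */
static_assert(TEXTURE_WIDE == 0x10000, "TEXTURE_WIDE lost its value");
static_assert(TEXTURE_HUGE == 0x100000000, "TEXTURE_HUGE lost its value");
static_assert(TEXTURE_ALL == ULLONG_MAX, "TEXTURE_ALL lost its value");

/* Round-trips a flag through the enumeration type itself. */
unsigned long long flag_bits(enum texture_flag flag) {
    return (unsigned long long)flag;
}
```

Note that nothing here pins down which integer type the compiler picks for texture_flag; the sketch only checks that the values survive.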

Suffice to say I’m extremely happy this paper got in and that we retroactively fixed a lot of code that was not supposed to be compiling on a lot of platforms, at all. The underlying type of an enumeration can still be some implementation-defined integer type, but that’s what this next paper is for…

N3030 - Enhanced Enumerations

This was the paper everyone was really after. It also got in, and rather than being about “value-preservation”, it was about type preservation. I could write a lot, but whitequark – as usual – describes it best:

i realized today that C is so bad at its job that it needs the help of C++ to make some features of its ABI usable (since you can specify the width of an enum in C++ but not C)

C getting dumpstered by C++ is a common occurrence, but honestly? For a feature like this? It is beyond unacceptable that C could not give a specific type for its enumerations, and therefore made the use of enumerations in e.g. bit fields or similar poisonous, bad, and non-portable. There’s already so much to contend with in C to write good close-to-the-hardware code: now we can’t even use enumerations portably without 5000 static checks and special flags to make sure we got the right type for our enumerations? Utter hogwash and a blight on the whole C community that it took this long to fix the problem. But, as whitequark also stated:

in this case the solution to “C is bad at its job” is definitely to “fix C” because, even if you hate C so much you want to eradicate it completely from the face of the earth, we’ll still be stuck with the C ABI long after it’s gone

It was time to roll up my sleeves and do what I always did: take these abominable programming languages to task for their inexcusably poor behavior. The worst part is, I almost let this paper slip by because someone else – Clive Pygott – was handling it. In fact, Clive was handling this even before Catherine made the tweet; N2008, from…

oh my god, it’s from 2016.

I had not realized Clive had been working on it this long until, during one meeting, Clive – when asked about the status of an updated version of this paper – said (paraphrasing) “yeah, I’m not carrying this paper forward anymore, I’m tired, thanks”.

…

That’s not, uh, good. I quickly snapped up in my chair, slammed the Mute-Off button, and nearly fumbled the mechanical mute on my microphone as I sputtered a little so I could speak up: “hey, uh, Clive, could you forward me all the feedback for that paper? There’s a lot of people that want this feature, and it’s really important to them, so send me all the feedback and I’ll see if I can do something”. True to Clive’s word, minutes after the final day on the mid-2021 meeting, he sent me all the notes. And it was…

… a lot.

I didn’t realize Clive had this much push back. It was late 2021. 2022 was around the corner, we were basically out of time to workshop stuff. I frequently went to twitter and ranted about enumerations, from October 2021 and onward. The worst part is, most people didn’t know, so they just assumed I was cracked up about something until I pointed them to the words in the standard and then revealed all the non-standard behavior. Truly, the C specification for enumerations was something awful.

Of course, no matter how much I fumed, anger is useless without direction.

I honed that virulent ranting into a weapon: two papers, that eventually became what you’re reading about now. N3029 and N3030 were the crystallization of how much I hated this part of C, hated its specification, loathed the way the Committee worked, and despised a process that led us for over 30 years to end up in this exact spot. This man – Clive – had been at this since 2016. It’s 2022. 5 years in, he gave up trying to placate all the feedback, and that left me only 1 year to clean this stuff up.

Honestly, if I didn’t have a weird righteous anger, the paper would’ve never made it.

Never underestimate the power of anger. A lot of folk and many cultures spend time trying to get you to “manage your emotions” and “find serenity”, often to the complete exclusion of getting mad at things. You wanna know what I think?

🖕 ““Serenity””

Serenity, peace, all of that can be taken and shoved where the sun don’t shine. We were delivered a hot garbage language, made Clive Pygott – one of the smartest people working on the C Memory Model – gargle Committee feedback for 5 years, get stuck in a rocky specification, and ultimately abandon the effort. Then, we had to do some heroic editing and WAY too much time of 3 people – Robert Seacord, Jens Gustedt, and Joseph Myers – just to hammer it into shape while I had to drag that thing kicking and screaming across the finish line. Even I can’t keep that up for a long time, especially with all the work I also had to do with #embed and Modern Bit Utilities and 10+ other proposals I was fighting to fix. “Angry” is quite frankly not a strong enough word to describe a process that can make something so necessary spin its wheels for 5 years. It’s absolutely bananas this is how ISO-based, Committee-based work has to be done. To all the other languages eyeing the mantle of C and C++, thinking that an actual under-ISO working group will provide anything to them.

Do. Not.

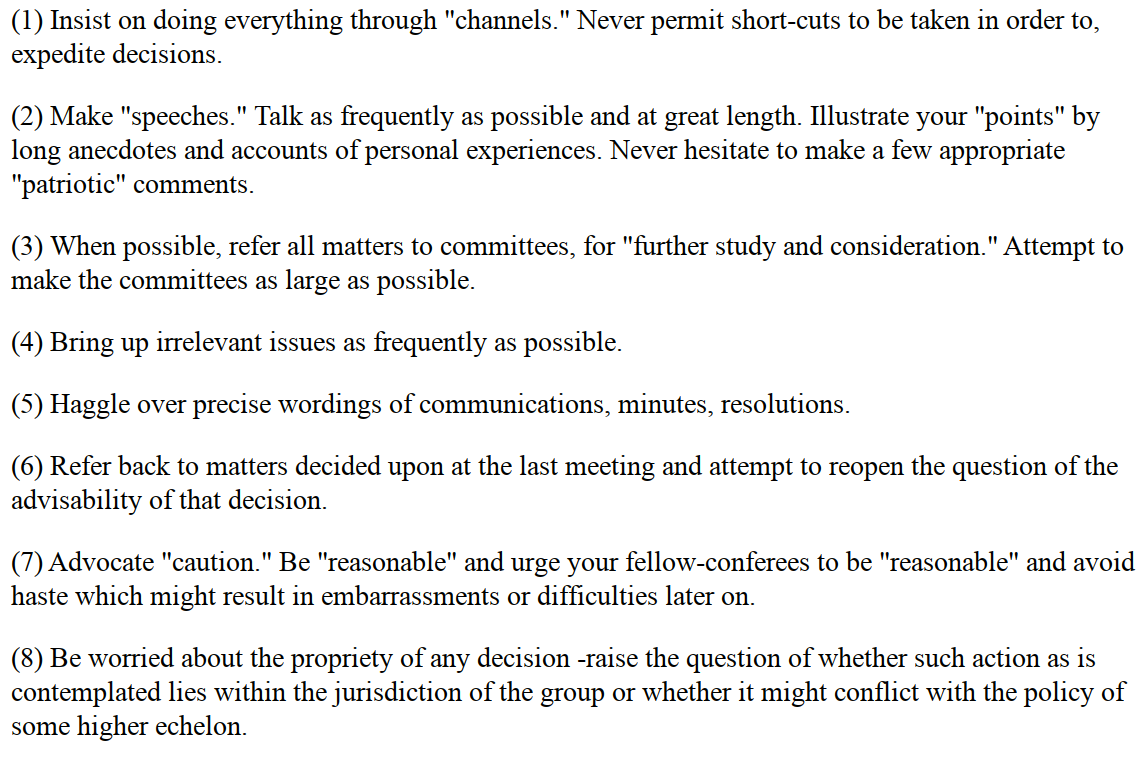

Nothing about ISO or IEC or its various subcommittees incentivizes progress. It incentivizes endless feedback loops, heavy weighted processes, individual burn out, and low return-on-investment. Do anything – literally anything – else with your time. If you need the ISO sticker because you want ASIL A/B/C/D certification for your language, then by all means: figure out a way to make it work. But keep your core process, your core feedback, your core identity out of ISO. You can standardize existing practices way better than this, and without nearly this much gnashing of teeth and pullback. No matter how politely it’s structured, the policies of ISO and the way it expects Committees to be structured are a deeply-embedded form of bureaucratic violence against the least of these, its contributors, and you deserve better than this. So much of this CIA sabotage field manual’s list:

should not have a directly-applicable analogue that describes how an International Standards Organization conducts business. But if it is what it is, then it’s time to roll up the sleeves. Don’t be sad. Get mad. Get even.

Anyways, enumerations. You can add types to them:

enum e : unsigned short {

x

};

int main (int argc, char* argv[]) {

return _Generic(x, unsigned short: 0, default: 1);

}

Unlike before, this will always return 0 on every platform, no exceptions. You can stick it in structures and unions and use it with bitfields and as long as your implementation is not completely off its rocker, you will get entirely dependable alignment, padding, and sizing behavior. Enjoy! 🎉

N3020 - Qualifier-preserving Standard Functions

This is a relatively simple paper, but closes up a hole that’s existed for a while. Nominally, it’s undefined-behavior to modify an originally-const array – especially a string literal – through a non-const pointer. So,

why exactly were strchr, bsearch, strpbrk, strrchr, strstr, memchr, and their wide counterparts basically taking const in and stripping it out in the return value?

The reason is because these had to be singular functions that defined a single externally-visible function call. There’s no overloading in C, so back in the old days when these functions were cooked up, you could only have one. We could not exclude people who wanted to write into the returned pointers of these functions, so we made the optimal (at the time) choice of simply removing the const from the return values. This was not ideal, but it got us through the door.

Now, with type-generic macros on the table, we do not have this limitation. It was just a matter of someone getting inventive enough and writing the specification up for it, and that’s exactly what Alex Gilding did! It looks a little funny in the standardese, but:

#include <string.h>

QChar *strchr(QChar *s, int c);

This describes that if you pass in a pointer to const-qualified char, you get back a pointer to const-qualified char. Similarly if there is no const. It’s a nice little addition that can help improve read-only memory safety. It might mean that people using any one of the aforementioned functions as a free-and-clear “UB-cast” to trick the compiler will have to fess up and use a real cast instead.
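To get a feel for how a type-generic macro can keep the qualifier around, here is a rough sketch using C11’s _Generic. The names find_char, upcase_first, and offset_of are made up for illustration, and this is not how any particular standard library spells its strchr; it only shows the idea of picking the return type from the argument type.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical wrapper (not a standard name): the result is
 * const-qualified exactly when the argument is. */
#define find_char(S, C)                                \
    _Generic((S),                                      \
        const char *: (const char *)strchr((S), (C)),  \
        char *: (char *)strchr((S), (C)))

/* Writes through the result: fine, the input was not const. */
static void upcase_first(char *s, char c) {
    char *hit = find_char(s, c);
    if (hit != NULL) {
        /* assumes c is an ASCII lowercase letter */
        *hit = (char)(*hit - 'a' + 'A');
    }
}

/* Read-only use: the result stays const char *, so assigning it to a
 * plain char * without a cast would be flagged by the compiler.
 * Returns the offset of the first match, or strlen(s) if none. */
static size_t offset_of(const char *s, char c) {
    const char *hit = find_char(s, c);
    return hit != NULL ? (size_t)(hit - s) : strlen(s);
}
```

Calling upcase_first on a writable buffer holding "hello" with 'l' turns it into "heLlo", while offset_of never gets a writable pointer at all. Anything other than char * or const char * simply fails to compile in this sketch, which is stricter than the real thing.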

N3042 - Introduce the nullptr constant

Link.

To me, this one was obviously needed, though not everyone thinks so. For a long time, people liked using NULL, (void*)0, and literal 0 as the null pointer constant. And they are certainly not wrong to do so: the first one in that list is a preprocessor macro resolving to either of the other two. While nominally it would be nice if it always resolved to (void*)0, compatibility for older C library implementations and the code built on top of them demands that we not change NULL. Of course, this made for some interesting problems in portability:

#include <stdio.h>

int main (int argc, char* argv[]) {

printf("ptr: %p", NULL); // oops

return 0;

}

Now, nobody’s passing NULL directly to printf(…), but in a roundabout way we had NULL - the macro itself - filtering down into function calls with variadic arguments. Or, more critically, we had people just passing straight up literal 0. “It’s the null pointer constant, that’s perfectly fine to pass to something expecting a pointer, right?” This was, of course, wrong. It would be nice if this was true, but it wasn’t, and on certain ABIs that had consequences. The registers and stack locations used for passing a pointer were not always the same as those used for a literal 0 or - worse - they were the same, but the literal 0 didn’t fill in all the expected space of the register (32-bit vs. 64-bit, for example). That meant people doing printf("%p", 0); were in many ways relying purely on the luck of their implementation that it wasn’t producing visibly broken results from what was actual undefined behavior! Whoops.

nullptr and the associated nullptr_t type in <stddef.h> fix that problem. You can specify nullptr, and it’s required to have the same underlying representation as the null pointer constant in char* or void* form. This means it will always be passed correctly, for all ABIs, and you won’t read garbage bits. It also aids in the case of _Generic: with NULL being implementation-defined, you could end up with void* or 0. With nullptr, you get exactly nullptr_t: this means you don’t need to give up the _Generic slot for either int or void*, especially if you’re expecting actual void* pointers that point to stuff. Small addition, gets rid of some Undefined Behavior cases, nice change.
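A small sketch of where this bites in practice: a variadic function that reads pointers until it hits a null sentinel. count_strings and END_OF_ARGS are hypothetical names of my own choosing, and the #if falls back to an explicitly pointer-typed null on pre-C23 compilers so the example still builds there.

```c
#include <assert.h>
#include <stdarg.h>
#include <stddef.h>

/* Hypothetical sentinel: nullptr where C23 is available, otherwise an
 * explicitly pointer-typed null. A bare 0 here would be passed as an
 * int, which is exactly the gamble described above. */
#if defined(__STDC_VERSION__) && __STDC_VERSION__ >= 202311L
#define END_OF_ARGS nullptr
#else
#define END_OF_ARGS ((void *)0)
#endif

/* Counts the strings passed, starting at `first` and stopping at the
 * sentinel. Every variadic argument is read back as a char pointer. */
static size_t count_strings(const char *first, ...) {
    size_t count = 0;
    va_list args;
    va_start(args, first);
    for (const char *s = first; s != NULL; s = va_arg(args, const char *)) {
        ++count;
    }
    va_end(args);
    return count;
}
```

With this, count_strings("a", "b", "c", END_OF_ARGS) walks three strings and stops cleanly. Swap the sentinel for a plain 0 and the va_arg call reads an int-sized argument as a pointer, which may or may not happen to work on your ABI.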

Someone recently challenged me, however: they said this change is not necessary and bollocks, and we should simply force everyone to define NULL to be void*. I said that if they’d like that, then they should go to those vendors themselves and ask them to change and see how it goes. They said they would, and they’d like a list of vendors defining NULL to be 0. Problem: quite a few of them are proprietary, so here’s my Open Challenge:

if you (yes, you!!) have got a C standard library (or shim/replacement) where you define NULL to be 0 and not the void-pointer version, send me a mail and I’ll get this person in touch with you so you can duke it out with each other. If they manage to convince enough vendors/maintainers, I’ll convince the National Body I’m with to write a National Body Comment asking for nullptr to be rescinded. Of course, they’ll need to not only reach out to these people, but convince them to change their NULL from 0 to ((void*)0), which. Well.

Good luck to the person who signed up for this.

N3022 - Modern Bit Utilities

Link.

Remember how there were all those instructions available since like 1978 – you know, in the Before Times™, before I was even born and my parents were still young? – and how we had easy access to them through all our C compilers because we quickly standardized existing practice from last century?

… Yeah, I don’t remember us doing that either.

Modern Bit Utilities isn’t so much “modern” as “catching up to 40-50 years ago”. There were some specification problems and I spent way too much time fighting on so many fronts that, eventually, something had to suffer: although the paper provides wording for Rotate Left/Right, 8-bit Endian-Aware Loads/Stores, and 8-bit Memory Reversal (fancy way of saying, “byteswap”), the specification had so many tiny issues in it that opposition mounted to prevent it from being included-and-then-fixed-up-during-the-C23-commenting-period, or just included at all. I was also too tired by the last meeting day, Friday, to actually fight hard for it: even though a few other members of WG14 sacrificed 30 minutes of their block to get Rotate Left/Right in, others insisted that they wanted to do the Rotate Left/Right functions in a different style. So I decided to just defer it to post-C23 and come back later.

Sorry.

Still, with the new <stdbit.h>, this paper provides:

- Endian macros (__STDC_ENDIAN_BIG__, __STDC_ENDIAN_LITTLE__, __STDC_ENDIAN_NATIVE__)
- stdc_popcount
- stdc_leading_zeros / stdc_leading_ones / stdc_trailing_zeros / stdc_trailing_ones
- stdc_first_leading_zero / stdc_first_leading_one / stdc_first_trailing_zero / stdc_first_trailing_one
- stdc_has_single_bit
- stdc_bit_width
- stdc_bit_ceil
- stdc_bit_floor
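Not every toolchain ships <stdbit.h> yet, so to make a few of those names less abstract, here is a rough sketch of what they compute, written as plain portable loops for unsigned int only. The sketch_ names are hypothetical stand-ins and not the standard spellings, and the described results (0 for a zero input to the width and floor functions) are my reading of the specification rather than a quote of it.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical stand-ins for illustration only; the real <stdbit.h>
 * functions are type-generic and usually map to single instructions. */

/* Number of 1 bits, the job of a "popcount". */
static unsigned sketch_popcount(unsigned value) {
    unsigned count = 0;
    for (; value != 0; value &= value - 1) { /* clears the lowest set bit */
        ++count;
    }
    return count;
}

/* True when exactly one bit is set, i.e. value is a power of two. */
static bool sketch_has_single_bit(unsigned value) {
    return value != 0 && (value & (value - 1)) == 0;
}

/* Bits needed to represent value: 0 for 0, otherwise 1 + floor(log2(value)). */
static unsigned sketch_bit_width(unsigned value) {
    unsigned width = 0;
    for (; value != 0; value >>= 1) {
        ++width;
    }
    return width;
}

/* Largest power of two not greater than value, or 0 when value is 0. */
static unsigned sketch_bit_floor(unsigned value) {
    return value == 0 ? 0u : 1u << (sketch_bit_width(value) - 1);
}
```

So sketch_bit_width(255) is 8 and sketch_bit_floor(100) is 64. The point of having these in the standard is that you stop writing loops like the ones above and let the implementation pick the instruction.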

“Where’s the endian macros for Honeywell architectures or PDP endianness?” You can get that if __STDC_ENDIAN_NATIVE__ isn’t equal to either the little OR the big macro:

#include <stdbit.h>

#include <stdio.h>

int main (int argc, char* argv[]) {

if (__STDC_ENDIAN_NATIVE__ == __STDC_ENDIAN_LITTLE__) {

printf("little endian! uwu\n");

}

else if (__STDC_ENDIAN_NATIVE__ == __STDC_ENDIAN_BIG__) {

printf("big endian OwO!\n");

}

else {

printf("what is this?!\n");

}

return 0;

}

If you fall into the last branch, you have some weird endianness. We do not provide a named macro for it because there is too much confusion around what the exact proper byte order for “PDP Endian” or “Honeywell Endian” or “Bi Endian” would end up being.

“What’s that ugly stdc_ prefix?”

For the bit functions, a prefix was added to them in the form of stdc_…. Why?

popcount is a really popular function name. If the standard were to take it, we’d effectively be loading up a gun to shoot a ton of existing codebases right in the face. The only proper resolution I could get to the problem was adding stdc_ in front. It’s not ideal, but honestly it’s the best I could do on short notice. We do not have namespaces in C, which means any time we add functionality we basically have to square off with users. It’s most certainly not a fun part of proposal development: thus, we get a stdc_ prefix. Perhaps these will be the first of many functions to use such prefixes so we do not have to step on users’ toes, but I imagine for enhancements and fixes to existing functionality, we will keep writing function names by the old rules. This will be decided later by a policy paper, but that policy paper only applies to papers after C23 (and after we get to have that discussion).

N3006 + N3007 - Type Inference for object definitions

Link.

This is a pretty simple paper, all things considered. If you ever used __auto_type from GCC: this is that, with the name auto. I describe it like this because it’s explicitly not like C++’s auto feature: it’s significantly weaker and far more limited. Whereas C++’s auto allows you to declare multiple variables on the same line and even deduce partial qualifiers / types with it (such as auto* ptr1 = thing, *ptr2 = other_thing; to demand that thing and other_thing are some kind of pointer or convertible to one), the C version of auto is modeled pretty directly after the weaker version of __auto_type. You can only declare one variable at a time. There’s no pointer-capturing. And so on, and so forth:

int main (int argc, char* argv[]) {

auto a = 1;

return a; // returns int, no mismatches

}

It’s most useful in macro expressions, where you can avoid having to duplicate expressions with:

#define F(_NAME, ARG, ARG2, ARG3) \

typeof(ARG + (ARG2 || ARG3)) _NAME = ARG + (ARG2 | ARG3);

int main (int argc, char* argv[]) {

F(a, 1, 2, 3);

return a;

}

instead being written as:

#define F(_NAME, ARG, ARG2, ARG3) \

auto _NAME = ARG + (ARG2 | ARG3);

int main (int argc, char* argv[]) {

F(a, 1, 2, 3);

return a;

}

Being less prone to subtle or small errors that may not be caught by the compiler you’re using is a good thing when it comes to working with specific expressions. (You’ll notice the left-hand side of the _NAME definition in the first version had a subtle typo. If you did: congratulations! If you didn’t: well, auto is for you.) Expressions in macros can get exceedingly complicated, and worse, if there are unnamed structs or similar being used, it can be hard-to-impossible to name them. auto makes it possible to grasp these types and use them properly, resulting in a smoother experience.
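Another place this shows up is the classic swap macro, where the temporary needs whatever type the arguments have. What follows is a sketch with names I made up (INFER, SWAP, sort_pair); it uses C23 auto when the compiler reports C23 and otherwise assumes the GCC/Clang __auto_type extension is available, so it will not build on compilers that have neither.

```c
#include <assert.h>

/* Hypothetical spelling: C23 `auto` where available, otherwise the
 * GCC/Clang `__auto_type` extension it was modeled on. */
#if defined(__STDC_VERSION__) && __STDC_VERSION__ >= 202311L
#define INFER auto
#else
#define INFER __auto_type
#endif

/* The temporary takes whatever type (A) has; nothing is spelled twice. */
#define SWAP(A, B)               \
    do {                         \
        INFER swap_tmp_ = (A);   \
        (A) = (B);               \
        (B) = swap_tmp_;         \
    } while (0)

/* Orders a pair so that *lo <= *hi afterwards. */
static void sort_pair(double *lo, double *hi) {
    if (*lo > *hi) {
        SWAP(*lo, *hi);
    }
}
```

The same SWAP works for int, double, or a struct without the caller naming the type, and without a typeof expression that has to be kept in sync with the initializer.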

Despite being a simple feature, I expect this will be one of the most divisive for C programmers. People already took to the streets in a few places to declare C a dead language, permanently ruined by this change. And, as a Committee member, if that actually ends up being the case? If this actually ends up completely destroying C for any of the reasons people have against auto and type inference for a language that quite literally just let you completely elide types in function calls and gave you “implicit int” behavior that compilers today still have to support so that things like OpenSSL can still compile?2

Don’t threaten me with a good time, now.

N2897 - memset_explicit

Link.

memset_explicit is memset_s from Annex K, without the Annex K history/baggage. It serves functionally the same purpose, too. It took a lot of (perhaps too much) discussion, but Miguel Ojeda pursued it all the way to the end. So, now we have a standard, mandated, always-present memset_explicit that can be used in security-sensitive contexts, provided your compiler and standard library implementers work together to not Be Evil™.
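For context on why this needs to exist at all: a plain memset on a buffer that is never read again is fair game for the optimizer to delete. Below is a sketch of a commonly used fallback idiom for toolchains without memset_explicit, with the hypothetical names wipe_bytes and check_pin. It is not the standard function and not how any library is known to implement it; it relies on compilers keeping stores made through a volatile-qualified pointer, which they do in practice.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical fallback, not the standard function: the stores go
 * through a volatile-qualified pointer so that the compiler keeps
 * them even though nothing reads the buffer afterwards. */
static void wipe_bytes(void *buffer, size_t size) {
    volatile unsigned char *bytes = (volatile unsigned char *)buffer;
    for (size_t i = 0; i < size; ++i) {
        bytes[i] = 0;
    }
}

/* Typical use: scrub a secret right before it goes out of scope, which
 * is exactly where a plain memset is at risk of being optimized away.
 * Returns 1 when `entered` matches the (made-up) secret, 0 otherwise. */
static int check_pin(const char *entered) {
    char secret[5] = { '1', '2', '3', '4', '\0' };
    int ok = 1;
    for (size_t i = 0; i < sizeof secret; ++i) {
        if (entered[i] != secret[i]) {
            ok = 0;
            break; /* stops at the first mismatch, so no overread */
        }
    }
    wipe_bytes(secret, sizeof secret);
    return ok;
}
```

With C23, the wipe_bytes call becomes memset_explicit(secret, 0, sizeof secret) and the guarantee comes from the standard rather than from an idiom.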

Hoorah! 🎉

N2888 - Exact-width Integer Types May Exceed (u)intmax_t

Link.

The writing has been on the wall for well over a decade now; intmax_t and uintmax_t have been inadequate the entire industry over and have been consistently limiting the evolution of C’s integer types year over year, and affecting downstream languages. While we cannot exempt every single integer type from the trappings of intmax_t and uintmax_t, we can at least bless the intN_t types and uintN_t types so they can go beyond what the two max types handle. There is active work in this area to allow us to transition to a better ABI and let these two types live up to their promises, but for now the least we could do is let the vector extensions and extended compiler modes for uint128_t, uint256_t, uint512_t, etc. etc. all get some time in the sun and out of the (u)intmax_t shadow.

This doesn’t help for the preprocessor, though, since you are still stuck with the maximum value that intmax_t and uintmax_t can handle. Integer literals and expressions will still be stuck dealing with this problem, but at the very least there should be some small amount of portability between the Beefy Machines™ and the presence of the newer UINT128_WIDTH and such macros.

Not the best we can do, but progress in the right direction! 🎉

And That’s All I’m Writing About For Now

Note that I did not say “that is it”: there’s quite a few more features that made it in, but my hands are tired and there’s a lot of papers that were accepted. There are also some I do not feel I can do great justice to, and quite frankly the papers themselves make better explanations than I do. Particularly, N2956 - unsequenced functions is a really interesting paper that can enable some intense optimizations with user attribute markup. Its performance improvements can also be applied locally:

#include <math.h>

#include <fenv.h>

inline double distance (double const x[static 2]) [[reproducible]] {

#pragma FP_CONTRACT OFF

#pragma FENV_ROUND FE_TONEAREST

// We assert that sqrt will not be called with invalid arguments

// and the result only depends on the argument value.

extern typeof(sqrt) [[unsequenced]] sqrt;

return sqrt(x[0]*x[0] + x[1]*x[1]);

}

I’ll leave the paper to explain how exactly that’s supposed to work, though! On top of that, we also removed Trigraphs??! (N2940) from C, and we made it so the _BitInt feature can be used with bit fields (N2969, nice). (If you don’t know what Trigraphs are, consider yourself blessed.)

Another really consequential paper is the Tag Compatibility paper by Martin Uecker, N3037. It makes defining generic data structures through macros a lot easier, and does not require a pre-declaration in order to use them nicely. A lot of people were thrilled about this one and picked up on the improvement immediately: it helps us get one step closer to maybe having room to start shipping some cool container libraries in the future. You should be on the lookout for when compilers implement this, and rush off to the races to start developing nicer generic container libraries for C in conjunction with all the new features we put in!

There is also a lot of functionality that did not make it, such as Unicode Functions, defer, Lambdas/Blocks/Nested Functions, wide function pointers, constexpr functions, the byteswap and other low-level bit functionality I spoke of before, statement expressions, additional macro functionality, break break (or something like it), size_t literals, __supports_literal, Transparent Aliases, and more.

But For Now?

My work is done. I’ve got to go take a break and relax. You can find the latest draft copy of the Committee Draft Standard N3047 here. It’s probably filled with typos and other mistakes; I’m not a great project editor, honestly, but I do try, and I guess that’s all I can do for all of us. That’s it for me and C for the whole year. So now, 3

it’s sleepy time. Nighty night, and thanks for coming on this wild ride with me 💚4.

Footnotes

- except for {}, which is a valid initializer as of C23 thanks to a different paper I wrote. This was meant to properly 0 out VLA data without requiring memset, and it is safer because it includes no elements-to-initialize in its list (which means a VLA of size-0 or a VLA that is “too small” to fit the initializer need not become some kind of weird undefined behavior / “implementation-defined constraint violation” sort of deal).

- “Heh” Anya-style Art by Stratica from Twitter.

- Animorph Posting by Aria Beingessner, using art drawn by lilwilbug for me!

- Title photo by Terje Sollie from Pexels.